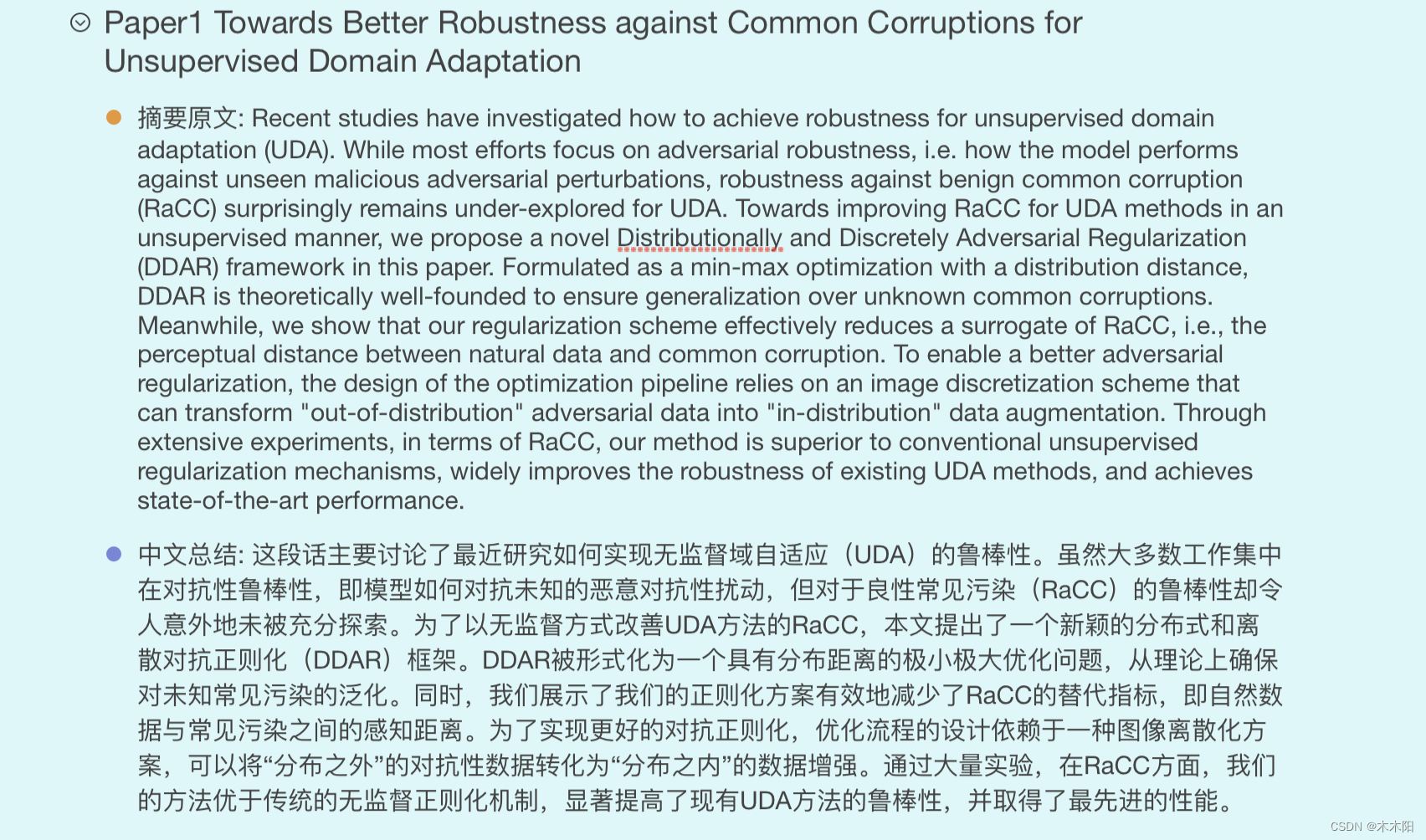

Paper1 Towards Better Robustness against Common Corruptions for Unsupervised Domain Adaptation

Abstract: Recent studies have investigated how to achieve robustness for unsupervised domain adaptation (UDA). While most efforts focus on adversarial robustness, i.e. how the model performs against unseen malicious adversarial perturbations, robustness against benign common corruption (RaCC) surprisingly remains under-explored for UDA. Towards improving RaCC for UDA methods in an unsupervised manner, we propose a novel Distributionally and Discretely Adversarial Regularization (DDAR) framework in this paper. Formulated as a min-max optimization with a distribution distance, DDAR is theoretically well-founded to ensure generalization over unknown common corruptions. Meanwhile, we show that our regularization scheme effectively reduces a surrogate of RaCC, i.e., the perceptual distance between natural data and common corruption. To enable a better adversarial regularization, the design of the optimization pipeline relies on an image discretization scheme that can transform “out-of-distribution” adversarial data into “in-distribution” data augmentation. Through extensive experiments, in terms of RaCC, our method is superior to conventional unsupervised regularization mechanisms, widely improves the robustness of existing UDA methods, and achieves state-of-the-art performance.

Summary: Most work on robust unsupervised domain adaptation (UDA) targets adversarial robustness, i.e. how a model performs against unseen malicious perturbations, while robustness against benign common corruptions (RaCC) remains surprisingly under-explored. To improve RaCC for UDA methods without supervision, the paper proposes the Distributionally and Discretely Adversarial Regularization (DDAR) framework. DDAR is formulated as a min-max optimization with a distribution distance, which gives it a theoretical basis for generalizing to unknown common corruptions. The authors also show that the regularization reduces a surrogate of RaCC: the perceptual distance between natural data and common corruptions. To obtain better adversarial regularization, the optimization pipeline relies on an image discretization scheme that turns "out-of-distribution" adversarial data into "in-distribution" data augmentation. In extensive experiments on RaCC, the method outperforms conventional unsupervised regularization mechanisms, markedly improves the robustness of existing UDA methods, and achieves state-of-the-art performance.

Paper2 Occ^2Net: Robust Image Matching Based on 3D Occupancy Estimation for Occluded Regions

Abstract: Image matching is a fundamental and critical task in various visual applications, such as Simultaneous Localization and Mapping (SLAM) and image retrieval, which require accurate pose estimation. However, most existing methods ignore the occlusion relations between objects caused by camera motion and scene structure. In this paper, we propose Occ^2Net, a novel image matching method that models occlusion relations using 3D occupancy and infers matching points in occluded regions. Thanks to the inductive bias encoded in the Occupancy Estimation (OE) module, it greatly simplifies bootstrapping of a multi-view consistent 3D representation that can then integrate information from multiple views. Together with an Occlusion-Aware (OA) module, it incorporates attention layers and rotation alignment to enable matching between occluded and visible points. We evaluate our method on both real-world and simulated datasets and demonstrate its superior performance over state-of-the-art methods on several metrics, especially in occlusion scenarios.

Summary: Image matching is a fundamental task in visual applications such as Simultaneous Localization and Mapping (SLAM) and image retrieval, which require accurate pose estimation. Most existing methods, however, ignore the occlusion relations between objects caused by camera motion and scene structure. The paper proposes Occ^2Net, an image matching method that models occlusion relations with 3D occupancy and infers matching points in occluded regions. The inductive bias encoded in the Occupancy Estimation (OE) module greatly simplifies bootstrapping a multi-view consistent 3D representation, which can then integrate information from multiple views. Combined with an Occlusion-Aware (OA) module, the method uses attention layers and rotation alignment to match occluded points with visible ones. Evaluation on real-world and simulated datasets shows better performance than state-of-the-art methods on several metrics, especially in occlusion scenarios.

Paper3 On the Robustness of Open-World Test-Time Training: Self-Training with Dynamic Prototype Expansion

Abstract: Generalizing deep learning models to unknown target domain distribution with low latency has motivated research into test-time training/adaptation (TTT/TTA). Existing approaches often focus on improving test-time training performance under well-curated target domain data. As figured out in this work, many state-of-the-art methods fail to maintain the performance when the target domain is contaminated with strong out-of-distribution (OOD) data, a.k.a. open-world test-time training (OWTTT). The failure is mainly due to the inability to distinguish strong OOD samples from regular weak OOD samples. To improve the robustness of OWTTT we first develop an adaptive strong OOD pruning which improves the efficacy of the self-training TTT method. We further propose a way to dynamically expand the prototypes to represent strong OOD samples for an improved weak/strong OOD data separation. Finally, we regularize self-training with distribution alignment and the combination yields the state-of-the-art performance on 5 OWTTT benchmarks. The code is available at https://github.com/Yushu-Li/OWTTT.

Summary: The need to generalize deep learning models to unknown target-domain distributions with low latency has motivated research on test-time training/adaptation (TTT/TTA). Existing approaches usually aim to improve test-time training on well-curated target-domain data. This work finds that many state-of-the-art methods fail to maintain performance when the target domain is contaminated with strong out-of-distribution (OOD) data, a setting called open-world test-time training (OWTTT). The failure stems mainly from an inability to distinguish strong OOD samples from regular weak OOD samples. To make OWTTT more robust, the authors first develop an adaptive strong-OOD pruning step that improves the efficacy of self-training TTT. They then dynamically expand the set of prototypes so that new prototypes represent strong OOD samples, which improves the separation of weak and strong OOD data. Finally, they regularize self-training with distribution alignment; the combination achieves state-of-the-art performance on 5 OWTTT benchmarks. Code is available at https://github.com/Yushu-Li/OWTTT.
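
The prototype-expansion idea described above can be illustrated with a small sketch. It is not the authors' implementation: the cosine-based score, the fixed `threshold`, and the function names are all assumptions made for illustration, and the adaptive pruning rule mentioned in the abstract is not reproduced.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def ood_score(feature, prototypes):
    """One minus the best cosine similarity to any prototype (assumed score)."""
    return 1.0 - max(cosine(feature, p) for p in prototypes)

def expand_prototypes(features, prototypes, threshold=0.5):
    """Append a new prototype whenever a sample is far from all existing ones.

    `threshold` is a hypothetical fixed cut-off chosen for this example.
    """
    pool = [list(p) for p in prototypes]
    flagged = []
    for f in features:
        is_strong_ood = ood_score(f, pool) > threshold
        flagged.append(is_strong_ood)
        if is_strong_ood:
            pool.append(list(f))  # the new prototype stands for strong OOD data
    return pool, flagged

source_prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two known classes
test_features = [[0.9, 0.1, 0.0], [0.0, 0.1, 1.0], [0.0, 0.0, 0.9]]
pool, flagged = expand_prototypes(test_features, source_prototypes)
```

In this toy run the second feature is far from both known prototypes and becomes a new prototype; the third feature then matches that new prototype instead of being flagged again.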

Paper4 3D-Aware Neural Body Fitting for Occlusion Robust 3D Human Pose Estimation

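Abstract: Regression-based methods for 3D human pose estimation directly predict the 3D pose parameters from a 2D image using deep networks. While achieving state-of-the-art performance on standard benchmarks, their performance degrades under occlusion. In contrast, optimization-based methods fit a parametric body model to 2D features in an iterative manner. The localized reconstruction loss can potentially make them robust to occlusion, but they suffer from the 2D-3D ambiguity. Motivated by the recent success of generative models in rigid object pose estimation, we propose 3D-aware Neural Body Fitting (3DNBF) - an approximate analysis-by-synthesis approach to 3D human pose estimation with SOTA performance and occlusion robustness. In particular, we propose a generative model of deep features based on a volumetric human representation with Gaussian ellipsoidal kernels emitting 3D pose-dependent feature vectors. The neural features are trained with contrastive learning to become 3D-aware and hence to overcome the 2D-3D ambiguity. Experiments show that 3DNBF outperforms other approaches on both occluded and standard benchmarks.

Summary: Regression-based methods for 3D human pose estimation use deep networks to predict 3D pose parameters directly from a 2D image. They reach state-of-the-art performance on standard benchmarks, but their performance degrades under occlusion. Optimization-based methods instead fit a parametric body model to 2D features iteratively; their localized reconstruction loss can make them robust to occlusion, but they suffer from 2D-3D ambiguity. Motivated by the recent success of generative models in rigid object pose estimation, the paper proposes 3D-aware Neural Body Fitting (3DNBF), an approximate analysis-by-synthesis approach to 3D human pose estimation with state-of-the-art performance and occlusion robustness. Specifically, it introduces a generative model of deep features based on a volumetric human representation in which Gaussian ellipsoidal kernels emit 3D pose-dependent feature vectors. The neural features are trained with contrastive learning so that they become 3D-aware and overcome the 2D-3D ambiguity. Experiments show that 3DNBF outperforms other approaches on both occluded and standard benchmarks.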

Paper5 EPiC: Ensemble of Partial Point Clouds for Robust Classification

Abstract: Robust point cloud classification is crucial for real-world applications, as consumer-type 3D sensors often yield partial and noisy data, degraded by various artifacts. In this work we propose a general ensemble framework, based on partial point cloud sampling. Each ensemble member is exposed to only partial input data. Three sampling strategies are used jointly, two local ones, based on patches and curves, and a global one of random sampling. We demonstrate the robustness of our method to various local and global degradations. We show that our framework significantly improves the robustness of top classification networks by a large margin. Our experimental setting uses the recently introduced ModelNet-C database by Ren et al., where we reach SOTA both on unaugmented and on augmented data. Our unaugmented mean Corruption Error (mCE) is 0.64 (current SOTA is 0.86) and 0.50 for augmented data (current SOTA is 0.57). We analyze and explain these remarkable results through diversity analysis. Our code is available at: https://github.com/yossilevii100/EPiC

Summary: Robust point cloud classification matters in real-world applications because consumer-grade 3D sensors often produce partial, noisy data degraded by various artifacts. The authors propose a general ensemble framework based on partial point cloud sampling, in which each ensemble member sees only part of the input. Three sampling strategies are used jointly: two local ones, based on patches and curves, and a global one based on random sampling. The method is shown to be robust to various local and global degradations, and the framework significantly improves the robustness of top classification networks. Experiments use the ModelNet-C database recently introduced by Ren et al., where the method reaches state-of-the-art results on both unaugmented and augmented data: the mean Corruption Error (mCE) is 0.64 on unaugmented data (previous best 0.86) and 0.50 on augmented data (previous best 0.57). The authors analyze and explain these results through diversity analysis. Code is available at https://github.com/yossilevii100/EPiC.
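
As a rough illustration of the ensemble idea, the sketch below covers only the global random-sampling strategy and averages class probabilities across members. The `toy_classifier` is a hypothetical stand-in for a trained network, the averaging rule is an assumption, and the patch-based and curve-based strategies are not shown.

```python
import random

def random_subset(points, keep, rng):
    """Global sampling: keep `keep` points chosen uniformly at random."""
    return rng.sample(points, keep)

def toy_classifier(points):
    """Hypothetical stand-in for a trained network: returns [P(flat), P(tall)].

    It only looks at the mean absolute z-coordinate of the points it sees.
    """
    mean_height = sum(abs(z) for _, _, z in points) / len(points)
    p_tall = min(1.0, mean_height)
    return [1.0 - p_tall, p_tall]

def ensemble_predict(points, members=5, keep=32, seed=0):
    """Average class probabilities over members that each see a partial cloud."""
    rng = random.Random(seed)
    totals = [0.0, 0.0]
    for _ in range(members):
        probs = toy_classifier(random_subset(points, keep, rng))
        totals = [t + p for t, p in zip(totals, probs)]
    return [t / members for t in totals]

cloud_rng = random.Random(1)
cloud = [(cloud_rng.random(), cloud_rng.random(), 0.8 + 0.2 * cloud_rng.random())
         for _ in range(128)]
probs = ensemble_predict(cloud)
label = max(range(len(probs)), key=probs.__getitem__)
```

Because every member sees a different subset, a corruption confined to a few points affects only some members, which is the intuition behind sampling partial inputs.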

Paper6 Deformer: Dynamic Fusion Transformer for Robust Hand Pose Estimation

Abstract: Accurately estimating 3D hand pose is crucial for understanding how humans interact with the world. Despite remarkable progress, existing methods often struggle to generate plausible hand poses when the hand is heavily occluded or blurred. In videos, the movements of the hand allow us to observe various parts of the hand that may be occluded or blurred in a single frame. To adaptively leverage the visual clue before and after the occlusion or blurring for robust hand pose estimation, we propose the Deformer: a framework that implicitly reasons about the relationship between hand parts within the same image (spatial dimension) and different timesteps (temporal dimension). We show that a naive application of the transformer self-attention mechanism is not sufficient because motion blur or occlusions in certain frames can lead to heavily distorted hand features and generate imprecise keys and queries. To address this challenge, we incorporate a Dynamic Fusion Module into Deformer, which predicts the deformation of the hand and warps the hand mesh predictions from nearby frames to explicitly support the current frame estimation. Furthermore, we have observed that errors are unevenly distributed across different hand parts, with vertices around fingertips having disproportionately higher errors than those around the palm. We mitigate this issue by introducing a new loss function called maxMSE that automatically adjusts the weight of every vertex to focus the model on critical hand parts. Extensive experiments show that our method significantly outperforms state-of-the-art methods by 10%, and is more robust to occlusions (over 14%).

Summary: Accurate 3D hand pose estimation is crucial for understanding how humans interact with the world. Despite remarkable progress, existing methods often struggle to produce plausible hand poses when the hand is heavily occluded or blurred. In video, hand movement reveals parts of the hand that may be occluded or blurred in any single frame. To adaptively exploit the visual cues before and after occlusion or blurring, the paper proposes Deformer, a framework that implicitly reasons about the relationships between hand parts within the same image (spatial dimension) and across timesteps (temporal dimension). A naive application of transformer self-attention is not sufficient, because motion blur or occlusion in some frames can heavily distort hand features and yield imprecise keys and queries. Deformer therefore includes a Dynamic Fusion Module that predicts the deformation of the hand and warps hand mesh predictions from nearby frames to explicitly support the estimate for the current frame. The authors also observe that errors are unevenly distributed across the hand, with vertices near the fingertips showing disproportionately higher errors than those near the palm. They mitigate this with a new loss function, maxMSE, which automatically adjusts the weight of every vertex so that the model focuses on critical hand parts. Extensive experiments show that the method outperforms state-of-the-art methods by 10% and is more robust to occlusion (by over 14%).

Paper7 Towards Robust Model Watermark via Reducing Parametric Vulnerability

Abstract: Deep neural networks are valuable assets considering their commercial benefits and huge demands for costly annotation and computation resources. To protect the copyright of DNNs, backdoor-based ownership verification becomes popular recently, in which the model owner can watermark the model by embedding a specific backdoor behavior before releasing it. The defenders (usually the model owners) can identify whether a suspicious third-party model is “stolen” from them based on the presence of the behavior. Unfortunately, these watermarks are proven to be vulnerable to removal attacks even like fine-tuning. To further explore this vulnerability, we investigate the parametric space and find there exist many watermark-removed models in the vicinity of the watermarked one, which may be easily used by removal attacks. Inspired by this finding, we propose a minimax formulation to find these watermark-removed models and recover their watermark behavior. Extensive experiments demonstrate that our method improves the robustness of the model watermarking against parametric changes and numerous watermark-removal attacks. The codes for reproducing our main experiments are available at https://github.com/GuanhaoGan/robust-model-watermarking.

Summary: Deep neural networks are valuable assets, given their commercial benefits and the costly annotation and computation they require. To protect the copyright of DNNs, backdoor-based ownership verification has recently become popular: the model owner watermarks the model by embedding a specific backdoor behavior before releasing it. Defenders (usually the model owners) can then determine whether a suspicious third-party model was "stolen" from them by checking for that behavior. Unfortunately, these watermarks have proven vulnerable to removal attacks, even ones as simple as fine-tuning. To explore this vulnerability, the authors investigate the parameter space and find that many watermark-removed models exist in the vicinity of the watermarked one, where removal attacks can easily reach them. Based on this finding, they propose a minimax formulation that finds these watermark-removed models and recovers their watermark behavior. Extensive experiments show that the method improves the robustness of model watermarking against parametric changes and numerous watermark-removal attacks. Code for reproducing the main experiments is available at https://github.com/GuanhaoGan/robust-model-watermarking.

Paper8 Learning Robust Representations with Information Bottleneck and Memory Network for RGB-D-based Gesture Recognition

Abstract: Not available.

Summary: Not available, because no abstract was provided for this paper.

Paper9 ACTIVE: Towards Highly Transferable 3D Physical Camouflage for Universal and Robust Vehicle Evasion

Abstract: Adversarial camouflage has garnered attention for its ability to attack object detectors from any viewpoint by covering the entire object’s surface. However, universality and robustness in existing methods often fall short as the transferability aspect is often overlooked, thus restricting their application only to a specific target with limited performance. To address these challenges, we present Adversarial Camouflage for Transferable and Intensive Vehicle Evasion (ACTIVE), a state-of-the-art physical camouflage attack framework designed to generate universal and robust adversarial camouflage capable of concealing any 3D vehicle from detectors. Our framework incorporates innovative techniques to enhance universality and robustness, including a refined texture rendering that enables common texture application to different vehicles without being constrained to a specific texture map, a novel stealth loss that renders the vehicle undetectable, and a smooth and camouflage loss to enhance the naturalness of the adversarial camouflage. Our extensive experiments on 15 different models show that ACTIVE consistently outperforms existing works on various public detectors, including the latest YOLOv7. Notably, our universality evaluations reveal promising transferability to other vehicle classes, tasks (segmentation models), and the real world, not just other vehicles.

Summary: Adversarial camouflage has attracted attention because it can attack object detectors from any viewpoint by covering the entire surface of an object. Existing methods often fall short on universality and robustness because they overlook transferability, which restricts them to a specific target with limited performance. To address this, the paper presents Adversarial Camouflage for Transferable and Intensive Vehicle Evasion (ACTIVE), a physical camouflage attack framework designed to generate universal and robust adversarial camouflage that can conceal any 3D vehicle from detectors. The framework includes a refined texture rendering that allows a common texture to be applied to different vehicles without being tied to a specific texture map, a novel stealth loss that makes the vehicle undetectable, and a smooth and camouflage loss that makes the adversarial camouflage look more natural. Extensive experiments on 15 different models show that ACTIVE consistently outperforms existing work on various public detectors, including the latest YOLOv7. The universality evaluations also show promising transferability to other vehicle classes, other tasks (segmentation models), and the real world, not just to other vehicles.

Paper10 Towards Nonlinear-Motion-Aware and Occlusion-Robust Rolling Shutter Correction

Abstract: This paper addresses the problem of rolling shutter correction in complex nonlinear and dynamic scenes with extreme occlusion. Existing methods suffer from two main drawbacks. Firstly, they face challenges in estimating the accurate correction field due to the uniform velocity assumption, leading to significant image correction errors under complex motion. Secondly, the drastic occlusion in dynamic scenes prevents current solutions from achieving better image quality because of the inherent difficulties in aligning and aggregating multiple frames. To tackle these challenges, we model the curvilinear trajectory of pixels analytically and propose a geometry-based Quadratic Rolling Shutter (QRS) motion solver, which precisely estimates the high-order correction field of individual pixels. Besides, to reconstruct high-quality occlusion frames in dynamic scenes, we present a 3D video architecture that effectively Aligns and Aggregates multi-frame context, namely, RSA2-Net. We evaluate our method across a broad range of cameras and video sequences, demonstrating its significant superiority. Specifically, our method surpasses the state-of-the-art by +4.98, +0.77, and +4.33 of PSNR on Carla-RS, Fastec-RS, and BS-RSC datasets, respectively. Code is available at https://github.com/DelinQu/qrsc.

Summary: The paper addresses rolling shutter correction in complex nonlinear and dynamic scenes with extreme occlusion. Existing methods have two main drawbacks. First, the uniform velocity assumption makes it hard to estimate an accurate correction field, which causes significant correction errors under complex motion. Second, drastic occlusion in dynamic scenes limits image quality because aligning and aggregating multiple frames is inherently difficult. To tackle these problems, the authors model the curvilinear trajectory of pixels analytically and propose a geometry-based Quadratic Rolling Shutter (QRS) motion solver that precisely estimates the high-order correction field of individual pixels. To reconstruct high-quality occluded frames in dynamic scenes, they also present RSA2-Net, a 3D video architecture that aligns and aggregates multi-frame context. Evaluation across a broad range of cameras and video sequences shows a clear advantage: the method surpasses the state of the art by +4.98, +0.77, and +4.33 in PSNR on the Carla-RS, Fastec-RS, and BS-RSC datasets, respectively. Code is available at https://github.com/DelinQu/qrsc.
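
For readers unfamiliar with the metric behind these gains, PSNR is defined as 10·log10(MAX² / MSE), measured in dB. The sketch below computes it for two tiny made-up pixel rows; the pixel values are illustrative only and do not come from the paper or its datasets.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of pixel intensities.
    """
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

ground_truth = [52, 55, 61, 59, 79, 61, 76, 61]   # made-up 8-bit pixel values
corrected = [54, 55, 60, 59, 77, 63, 76, 60]
score = psnr(ground_truth, corrected)             # roughly 45.7 dB
```

Since PSNR is logarithmic in the mean squared error, a gain of +4.98 dB corresponds to the MSE shrinking by a factor of about 10^0.498, i.e. a little more than 3.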

Paper11 ROME: Robustifying Memory-Efficient NAS via Topology Disentanglement and Gradient Accumulation

Abstract: Albeit being a prevalent architecture searching approach, differentiable architecture search (DARTS) is largely hindered by its substantial memory cost since the entire supernet resides in the memory. This is where the single-path DARTS comes in, which only chooses a single-path submodel at each step. While being memory-friendly, it also comes with low computational costs. Nonetheless, we discover a critical issue of single-path DARTS that has not been primarily noticed. Namely, it also suffers from severe performance collapse since too many parameter-free operations like skip connections are derived, just like DARTS does. In this paper, we propose a new algorithm called RObustifying Memory-Efficient NAS (ROME) to give a cure. First, we disentangle the topology search from the operation search to make searching and evaluation consistent. We then adopt Gumbel-Top2 reparameterization and gradient accumulation to robustify the unwieldy bi-level optimization. We verify ROME extensively across 15 benchmarks to demonstrate its effectiveness and robustness.

Summary: Differentiable architecture search (DARTS) is a prevalent architecture search approach, but it is hindered by its substantial memory cost, since the entire supernet resides in memory. Single-path DARTS addresses this by choosing only one single-path submodel at each step, which is memory-friendly and also computationally cheap. The authors identify a critical issue with single-path DARTS that had gone largely unnoticed: like DARTS, it suffers from severe performance collapse because too many parameter-free operations, such as skip connections, are derived. The paper proposes RObustifying Memory-Efficient NAS (ROME) as a remedy. First, the topology search is disentangled from the operation search so that searching and evaluation are consistent. Then Gumbel-Top2 reparameterization and gradient accumulation are used to make the unwieldy bi-level optimization more robust. ROME is verified extensively across 15 benchmarks to demonstrate its effectiveness and robustness.
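
The sampling half of a Gumbel-Top2 step can be sketched with the standard Gumbel-top-k trick: perturb each logit with Gumbel noise and keep the k largest. This is a generic illustration, not ROME's code. Treating the logits as scores for candidate input edges is an assumption, and the differentiable relaxation needed for gradient-based search is not shown.

```python
import math
import random

def gumbel_noise(rng):
    """Draw one sample from the standard Gumbel(0, 1) distribution."""
    u = rng.random()
    while u == 0.0:          # avoid log(0)
        u = rng.random()
    return -math.log(-math.log(u))

def gumbel_top_k(logits, k, rng):
    """Sample k distinct indices without replacement via perturbed logits."""
    perturbed = [(logit + gumbel_noise(rng), i) for i, logit in enumerate(logits)]
    perturbed.sort(reverse=True)
    return [i for _, i in perturbed[:k]]

rng = random.Random(0)
edge_logits = [0.1, 2.0, -1.0, 1.5]   # hypothetical scores for 4 candidate edges
chosen = gumbel_top_k(edge_logits, k=2, rng=rng)
```

Higher logits are selected more often, but every index keeps a non-zero chance, which is what lets a search procedure keep exploring.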

Paper12 Cross Modal Transformer: Towards Fast and Robust 3D Object Detection

Abstract: In this paper, we propose a robust 3D detector, named Cross Modal Transformer (CMT), for end-to-end 3D multi-modal detection. Without explicit view transformation, CMT takes the image and point clouds tokens as inputs and directly outputs accurate 3D bounding boxes. The spatial alignment of multi-modal tokens is performed by encoding the 3D points into multi-modal features. The core design of CMT is quite simple while its performance is impressive. It achieves 74.1% NDS (state-of-the-art with single model) on nuScenes test set while maintaining faster inference speed. Moreover, CMT has a strong robustness even if the LiDAR is missing. Code is released at https://github.com/junjie18/CMT.

Summary: The paper proposes Cross Modal Transformer (CMT), a robust 3D detector for end-to-end multi-modal 3D detection. Without any explicit view transformation, CMT takes image tokens and point cloud tokens as input and directly outputs accurate 3D bounding boxes. The multi-modal tokens are spatially aligned by encoding the 3D points into the multi-modal features. The core design is simple, yet the performance is impressive: CMT achieves 74.1% NDS on the nuScenes test set (state of the art for a single model) while maintaining faster inference speed. It also remains strongly robust when LiDAR is missing. Code is released at https://github.com/junjie18/CMT.

Paper13 CRN: Camera Radar Net for Accurate, Robust, Efficient 3D Perception

Abstract: Autonomous driving requires an accurate and fast 3D perception system that includes 3D object detection, tracking, and segmentation. Although recent low-cost camera-based approaches have shown promising results, they are susceptible to poor illumination or bad weather conditions and have a large localization error. Hence, fusing camera with low-cost radar, which provides precise long-range measurement and operates reliably in all environments, is promising but has not yet been thoroughly investigated. In this paper, we propose Camera Radar Net (CRN), a novel camera-radar fusion framework that generates a semantically rich and spatially accurate bird’s-eye-view (BEV) feature map for various tasks. To overcome the lack of spatial information in an image, we transform perspective view image features to BEV with the help of sparse but accurate radar points. We further aggregate image and radar feature maps in BEV using multi-modal deformable attention designed to tackle the spatial misalignment between inputs. CRN with real-time setting operates at 20 FPS while achieving comparable performance to LiDAR detectors on nuScenes, and even outperforms at a far distance on 100m setting. Moreover, CRN with offline setting yields 62.4% NDS, 57.5% mAP on nuScenes test set and ranks first among all camera and camera-radar 3D object detectors.

Summary: Autonomous driving requires an accurate and fast 3D perception system covering 3D object detection, tracking, and segmentation. Recent low-cost camera-based approaches show promising results, but they are susceptible to poor illumination and bad weather and have large localization errors. Fusing cameras with low-cost radar, which provides precise long-range measurements and operates reliably in all environments, is therefore promising but has not been thoroughly investigated. The paper proposes Camera Radar Net (CRN), a camera-radar fusion framework that generates a semantically rich and spatially accurate bird's-eye-view (BEV) feature map for various tasks. To compensate for the lack of spatial information in images, perspective-view image features are transformed to BEV with the help of sparse but accurate radar points. Image and radar feature maps are then aggregated in BEV using multi-modal deformable attention designed to handle the spatial misalignment between inputs. In its real-time setting, CRN runs at 20 FPS while achieving performance comparable to LiDAR detectors on nuScenes, and it even outperforms them at far distance in the 100 m setting. In its offline setting, CRN reaches 62.4% NDS and 57.5% mAP on the nuScenes test set and ranks first among all camera and camera-radar 3D object detectors.
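
The NDS figure quoted above is the nuScenes detection score, which combines mAP with five mean true-positive error metrics as NDS = (1/10)·[5·mAP + Σ(1 − min(1, mTP))]. The sketch below evaluates that formula; the input numbers are made up for illustration and are not CRN's results.

```python
def nds(m_ap, tp_errors):
    """nuScenes detection score from mAP and the five mean true-positive errors."""
    if len(tp_errors) != 5:
        raise ValueError("expected mATE, mASE, mAOE, mAVE and mAAE")
    # Each error is clipped at 1 and turned into a score in [0, 1].
    tp_scores = [1.0 - min(1.0, err) for err in tp_errors.values()]
    return (5.0 * m_ap + sum(tp_scores)) / 10.0

# Illustrative values only, not taken from any paper.
example_errors = {"mATE": 0.40, "mASE": 0.25, "mAOE": 0.50, "mAVE": 0.30, "mAAE": 0.15}
score = nds(m_ap=0.50, tp_errors=example_errors)   # 0.59
```

Half of the score comes from mAP and half from the error terms, so a detector can have a higher NDS than mAP when its translation, scale, orientation, velocity, and attribute errors are low.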

Paper14 HybridAugment++: Unified Frequency Spectra Perturbations for Model Robustness

Abstract: Convolutional Neural Networks (CNN) are known to exhibit poor generalization performance under distribution shifts. Their generalization has been studied extensively, and one line of work approaches the problem from a frequency-centric perspective. These studies highlight the fact that humans and CNNs might focus on different frequency components of an image. First, inspired by these observations, we propose a simple yet effective data augmentation method HybridAugment that reduces the reliance of CNNs on high-frequency components, and thus improves their robustness while keeping their clean accuracy high. Second, we propose HybridAugment++, which is a hierarchical augmentation method that attempts to unify various frequency-spectrum augmentations. HybridAugment++ builds on HybridAugment, and also reduces the reliance of CNNs on the amplitude component of images, and promotes phase information instead. This unification results in competitive to or better than state-of-the-art results on clean accuracy (CIFAR-10/100 and ImageNet), corruption benchmarks (ImageNet-C, CIFAR-10-C and CIFAR-100-C), adversarial robustness on CIFAR-10 and out-of-distribution detection on various datasets. HybridAugment and HybridAugment++ are implemented in a few lines of code, do not require extra data, ensemble models or additional networks.

Summary: Convolutional Neural Networks (CNNs) are known to generalize poorly under distribution shifts. Their generalization has been studied extensively, and one line of work approaches the problem from a frequency-centric perspective, highlighting that humans and CNNs may focus on different frequency components of an image. Inspired by these observations, the authors first propose HybridAugment, a simple yet effective data augmentation method that reduces the reliance of CNNs on high-frequency components and thereby improves robustness while keeping clean accuracy high. They then propose HybridAugment++, a hierarchical augmentation method that attempts to unify various frequency-spectrum augmentations. HybridAugment++ builds on HybridAugment and additionally reduces the reliance of CNNs on the amplitude component of images, promoting phase information instead. The unified method gives results competitive with or better than the state of the art on clean accuracy (CIFAR-10/100 and ImageNet), corruption benchmarks (ImageNet-C, CIFAR-10-C, and CIFAR-100-C), adversarial robustness on CIFAR-10, and out-of-distribution detection on various datasets. Both methods can be implemented in a few lines of code and require no extra data, ensemble models, or additional networks.
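
One plausible reading of frequency-based mixing is to keep the low frequencies of one image and take the high frequencies from another. The sketch below does this on 1-D rows with a box filter as the low-pass step. Both the box filter and the exact mixing rule are assumptions for illustration, not the authors' implementation, and the amplitude/phase part of HybridAugment++ is not shown.

```python
def box_blur(signal, radius=1):
    """Low-pass filter: average each sample with its neighbours (edges clamped)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def hybrid_mix(content, donor, radius=1):
    """Keep the low frequencies of `content`, take the high frequencies of `donor`."""
    low = box_blur(content, radius)
    # High-frequency residual: what the low-pass filter removed from `donor`.
    high = [d - b for d, b in zip(donor, box_blur(donor, radius))]
    return [l + h for l, h in zip(low, high)]

image_a = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0]   # 1-D stand-ins for image rows
image_b = [0.5, 0.1, 0.9, 0.2, 0.8, 0.3]
augmented = hybrid_mix(image_a, image_b)
```

Mixing an image with itself returns the original, which is a convenient sanity check that the low and high components add back up.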

Paper15 Uncertainty Guided Adaptive Warping for Robust and Efficient Stereo Matching

Abstract: Correlation based stereo matching has achieved outstanding performance, which pursues cost volume between two feature maps. Unfortunately, current methods with a fixed trained model do not work uniformly well across various datasets, greatly limiting their real-world applicability. To tackle this issue, this paper proposes a new perspective to dynamically calculate correlation for robust stereo matching. A novel Uncertainty Guided Adaptive Correlation (UGAC) module is introduced to robustly adapt the same model for different scenarios. Specifically, a variance-based uncertainty estimation is employed to adaptively adjust the sampling area during warping operation. Additionally, we improve the traditional non-parametric warping with learnable parameters, such that the position-specific weights can be learned. We show that by empowering the recurrent network with the UGAC module, stereo matching can be exploited more robustly and effectively. Extensive experiments demonstrate that our method achieves state-of-the-art performance over the ETH3D, KITTI, and Middlebury datasets when employing the same fixed model over these datasets without any retraining procedure. To target real-time applications, we further design a lightweight model based on UGAC, which also outperforms other methods over KITTI benchmarks with only 0.6 M parameters.

Summary: Correlation-based stereo matching, which builds a cost volume between two feature maps, has achieved outstanding performance. However, current methods with a fixed trained model do not work uniformly well across datasets, which greatly limits their real-world applicability. The paper proposes computing the correlation dynamically for robust stereo matching. It introduces an Uncertainty Guided Adaptive Correlation (UGAC) module that robustly adapts the same model to different scenarios. Specifically, a variance-based uncertainty estimate adaptively adjusts the sampling area during the warping operation. The traditional non-parametric warping is also extended with learnable parameters so that position-specific weights can be learned. Equipping a recurrent network with the UGAC module makes stereo matching more robust and effective. Extensive experiments show state-of-the-art performance on the ETH3D, KITTI, and Middlebury datasets using the same fixed model without any retraining. For real-time applications, the authors also design a lightweight UGAC-based model that outperforms other methods on the KITTI benchmarks with only 0.6 M parameters.

Paper16 Robo3D: Towards Robust and Reliable 3D Perception against Corruptions

Abstract: The robustness of 3D perception systems under natural corruptions from environments and sensors is pivotal for safety-critical applications. Existing large-scale 3D perception datasets often contain data that are meticulously cleaned. Such configurations, however, cannot reflect the reliability of perception models during the deployment stage. In this work, we present Robo3D, the first comprehensive benchmark heading toward probing the robustness of 3D detectors and segmentors under out-of-distribution scenarios against natural corruptions that occur in real-world environments. Specifically, we consider eight corruption types stemming from severe weather conditions, external disturbances, and internal sensor failure. We uncover that, although promising results have been progressively achieved on standard benchmarks, state-of-the-art 3D perception models are at risk of being vulnerable to corruptions. We draw key observations on the use of data representations, augmentation schemes, and training strategies, that could severely affect the model’s performance. To pursue better robustness, we propose a density-insensitive training framework along with a simple flexible voxelization strategy to enhance the model resiliency. We hope our benchmark and approach could inspire future research in designing more robust and reliable 3D perception models. Our robustness benchmark suite is publicly available.

Summary: The robustness of 3D perception systems under natural corruptions from environments and sensors is pivotal for safety-critical applications. Existing large-scale 3D perception datasets often contain meticulously cleaned data, so they cannot reflect how reliable perception models are once deployed. The authors present Robo3D, the first comprehensive benchmark for probing the robustness of 3D detectors and segmentors in out-of-distribution scenarios against natural corruptions that occur in real-world environments. It covers eight corruption types stemming from severe weather conditions, external disturbances, and internal sensor failure. The authors find that, although results on standard benchmarks have steadily improved, state-of-the-art 3D perception models remain vulnerable to corruptions. They draw key observations about data representations, augmentation schemes, and training strategies that can severely affect model performance. To improve robustness, they propose a density-insensitive training framework together with a simple, flexible voxelization strategy that enhances model resilience. They hope the benchmark and approach will inspire future research on more robust and reliable 3D perception models. The robustness benchmark suite is publicly available.

Paper17 Robust Evaluation of Diffusion-Based Adversarial Purification

Abstract: We question the current evaluation practice on diffusion-based purification methods. Diffusion-based purification methods aim to remove adversarial effects from an input data point at test time. The approach gains increasing attention as an alternative to adversarial training due to the disentangling between training and testing. Well-known white-box attacks are often employed to measure the robustness of the purification. However, it is unknown whether these attacks are the most effective for the diffusion-based purification since the attacks are often tailored for adversarial training. We analyze the current practices and provide a new guideline for measuring the robustness of purification methods against adversarial attacks. Based on our analysis, we further propose a new purification strategy improving robustness compared to the current diffusion-based purification methods.

Summary: The paper questions current evaluation practice for diffusion-based purification methods, which aim to remove adversarial effects from an input data point at test time. The approach is gaining attention as an alternative to adversarial training because it decouples training from testing. Well-known white-box attacks are often used to measure the robustness of purification, but it is unclear whether they are the most effective attacks against diffusion-based purification, since they are often tailored to adversarial training. The authors analyze current practice and provide a new guideline for measuring the robustness of purification methods against adversarial attacks. Based on this analysis, they also propose a new purification strategy that is more robust than current diffusion-based purification methods.

Paper18 Efficiently Robustify Pre-Trained Models

Abstract: A recent trend in deep learning algorithms has been towards training large scale models, having high parameter count and trained on big dataset. However, robustness of such large scale models towards real-world settings is still a less-explored topic. In this work, we first benchmark the performance of these models under different perturbations and datasets thereby representing real-world shifts, and highlight their degrading performance under these shifts. We then discuss on how complete model fine-tuning based existing robustification schemes might not be a scalable option given very large scale networks and can also lead them to forget some of the desired characteristics. Finally, we propose a simple and cost-effective method to solve this problem, inspired by knowledge transfer literature. It involves robustifying smaller models, at a lower computation cost, and then use them as teachers to tune a fraction of these large scale networks, reducing the overall computational overhead. We evaluate our proposed method under various vision perturbations including ImageNet-C,R,S,A datasets and also for transfer learning, zero-shot evaluation setups on different datasets. Benchmark results show that our method is able to induce robustness to these large scale models efficiently, requiring significantly lower time and also preserves the transfer learning, zero-shot properties of the original model which none of the existing methods are able to achieve.

Summary: A recent trend in deep learning is to train large-scale models with high parameter counts on big datasets, yet the robustness of such models in real-world settings is still under-explored. The authors first benchmark these models under different perturbations and datasets that represent real-world shifts, and highlight how their performance degrades. They then argue that existing robustification schemes based on fine-tuning the complete model may not scale to very large networks and can cause the models to forget some desired characteristics. Finally, they propose a simple, cost-effective method inspired by the knowledge transfer literature: robustify smaller models at lower computational cost, then use them as teachers to tune a fraction of the large-scale network, which reduces the overall computational overhead. The method is evaluated under various vision perturbations, including the ImageNet-C, R, S, and A datasets, and in transfer learning and zero-shot evaluation setups on different datasets. Benchmark results show that it induces robustness in large-scale models efficiently, takes significantly less time, and preserves the transfer learning and zero-shot properties of the original model, which none of the existing methods achieve.
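
The teacher-student step can be pictured with a standard knowledge-distillation loss, in which the student is pulled toward the teacher's softened class distribution. The sketch below is a generic KL-based loss and not necessarily the objective used in the paper. The logits are hypothetical, and selecting which fraction of the large network to tune is not shown.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened class distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

small_robust_teacher = [2.0, 0.5, -1.0]   # hypothetical logits from the small model
large_student = [1.0, 1.0, 0.0]           # hypothetical logits from the large model
loss = distillation_loss(small_robust_teacher, large_student)
```

Unlike the usual setup, the teacher here is the smaller model; the loss itself is unchanged, since it only compares the two output distributions.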

Paper19 Robust e-NeRF: NeRF from Sparse & Noisy Events under Non-Uniform Motion

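Abstract: Event cameras offer many advantages over standard cameras due to their distinctive principle of operation: low power, low latency, high temporal resolution and high dynamic range. Nonetheless, the success of many downstream visual applications also hinges on an efficient and effective scene representation, where Neural Radiance Field (NeRF) is seen as the leading candidate. Such promise and potential of event cameras and NeRF inspired recent works to investigate on the reconstruction of NeRF from moving event cameras. However, these works are mainly limited in terms of the dependence on dense and low-noise event streams, as well as generalization to arbitrary contrast threshold values and camera speed profiles. In this work, we propose Robust e-NeRF, a novel method to directly and robustly reconstruct NeRFs from moving event cameras under various real-world conditions, especially from sparse and noisy events generated under non-uniform motion. It consists of two key components: a realistic event generation model that accounts for various intrinsic parameters (e.g. time-independent, asymmetric threshold and refractory period) and non-idealities (e.g. pixel-to-pixel threshold variation), as well as a complementary pair of normalized reconstruction losses that can effectively generalize to arbitrary speed profiles and intrinsic parameter values without such prior knowledge. Experiments on real and novel realistically simulated sequences verify our effectiveness. Our code, synthetic dataset and improved event simulator are public.

Summary: Event cameras have many advantages over standard cameras thanks to their distinctive operating principle: low power, low latency, high temporal resolution, and high dynamic range. The success of many downstream visual applications also depends on an efficient and effective scene representation, for which Neural Radiance Field (NeRF) is seen as the leading candidate. The promise of event cameras and NeRF has inspired recent work on reconstructing NeRFs from moving event cameras. That work is limited mainly by its dependence on dense, low-noise event streams and by poor generalization to arbitrary contrast threshold values and camera speed profiles. The paper proposes Robust e-NeRF, a method that directly and robustly reconstructs NeRFs from moving event cameras under various real-world conditions, especially from sparse and noisy events generated under non-uniform motion. It has two key components: a realistic event generation model that accounts for various intrinsic parameters (e.g. time-independent, asymmetric threshold and refractory period) and non-idealities (e.g. pixel-to-pixel threshold variation), and a complementary pair of normalized reconstruction losses that generalize to arbitrary speed profiles and intrinsic parameter values without prior knowledge of them. Experiments on real sequences and on new, realistically simulated sequences verify the method's effectiveness. The code, synthetic dataset, and improved event simulator are public.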

Paper20 PhaseMP: Robust 3D Pose Estimation via Phase-conditioned Human Motion Prior

摘要原文: We present a novel motion prior, called PhaseMP, modeling a probability distribution on pose transitions conditioned by a frequency domain feature extracted from a periodic autoencoder. The phase feature further enforces the pose transitions to be unidirectional (i.e. no backward movement in time), from which more stable and natural motions can be generated. Specifically, our motion prior can be useful for accurately estimating 3D human motions in the presence of challenging input data, including long periods of spatial and temporal occlusion, as well as noisy sensor measurements. Through a comprehensive evaluation, we demonstrate the efficacy of our novel motion prior, showcasing its superiority over existing state-of-the-art methods by a significant margin across various applications, including video-to-motion and motion estimation from sparse sensor data, and etc.

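Summary: The paper presents a new motion prior, PhaseMP, which models a probability distribution over pose transitions conditioned on a frequency-domain feature extracted from a periodic autoencoder. The phase feature further enforces pose transitions to be unidirectional (i.e. no backward movement in time), which yields more stable and natural motions. In particular, the prior is useful for accurately estimating 3D human motion from challenging input data, including long periods of spatial and temporal occlusion and noisy sensor measurements. A comprehensive evaluation demonstrates the prior's efficacy and a significant margin over existing state-of-the-art methods across applications such as video-to-motion and motion estimation from sparse sensor data.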

Paper21 Robust Referring Video Object Segmentation with Cyclic Structural Consensus

摘要原文: Referring Video Object Segmentation (R-VOS) is a challenging task that aims to segment an object in a video based on a linguistic expression. Most existing R-VOS methods have a critical assumption: the object referred to must appear in the video. This assumption, which we refer to as “semantic consensus”, is often violated in real-world scenarios, where the expression may be queried against false videos. In this work, we highlight the need for a robust R-VOS model that can handle semantic mismatches. Accordingly, we propose an extended task called Robust R-VOS (RRVOS), which accepts unpaired video-text inputs. We tackle this problem by jointly modeling the primary R-VOS problem and its dual (text reconstruction). A structural text-to-text cycle constraint is introduced to discriminate semantic consensus between video-text pairs and impose it in positive pairs, thereby achieving multi-modal alignment from both positive and negative pairs. Our structural constraint effectively addresses the challenge posed by linguistic diversity, overcoming the limitations of previous methods that relied on the point-wise constraint. A new evaluation dataset, RRYTVOS is constructed to measure the model robustness. Our model achieves state-of-the-art performance on R-VOS benchmarks, Ref-DAVIS17 and Ref-Youtube-VOS, and also our RRYTVOS dataset.

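Summary: Referring Video Object Segmentation (R-VOS) is a challenging task that aims to segment an object in a video based on a linguistic expression. Most existing R-VOS methods rest on a critical assumption: the referred object must appear in the video. This assumption, called “semantic consensus”, is often violated in real-world scenarios, where an expression may be queried against the wrong video. The paper highlights the need for a robust R-VOS model that can handle semantic mismatches and proposes an extended task, Robust R-VOS (RRVOS), which accepts unpaired video-text inputs. The problem is tackled by jointly modeling the primary R-VOS problem and its dual (text reconstruction). A structural text-to-text cycle constraint is introduced to discriminate semantic consensus between video-text pairs and to impose it on positive pairs, achieving multi-modal alignment from both positive and negative pairs. The structural constraint effectively addresses the challenge of linguistic diversity and overcomes the limitations of earlier methods that relied on a point-wise constraint. A new evaluation dataset, RRYTVOS, is constructed to measure model robustness. The model achieves state-of-the-art performance on the R-VOS benchmarks Ref-DAVIS17 and Ref-Youtube-VOS and on the RRYTVOS dataset.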

Paper22 MoreauGrad: Sparse and Robust Interpretation of Neural Networks via Moreau Envelope

摘要原文: Explaining the predictions of deep neural nets has been a topic of great interest in the computer vision literature. While several gradient-based interpretation schemes have been proposed to reveal the influential variables in a neural net’s prediction, standard gradient-based interpretation frameworks have been commonly observed to lack robustness to input perturbations and flexibility for incorporating prior knowledge of sparsity and group-sparsity structures. In this work, we propose MoreauGrad as an interpretation scheme based on the classifier neural net’s Moreau envelope. We demonstrate that MoreauGrad results in a smooth and robust interpretation of a multi-layer neural network and can be efficiently computed through first-order optimization methods. Furthermore, we show that MoreauGrad can be naturally combined with L1-norm regularization techniques to output a sparse or group-sparse explanation which are prior conditions applicable to a wide range of deep learning applications. We empirically evaluate the proposed MoreauGrad scheme on standard computer vision datasets, showing the qualitative and quantitative success of the MoreauGrad approach in comparison to standard gradient-based interpretation methods.

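Summary: Explaining the predictions of deep neural networks has been a topic of great interest in the computer vision literature. Although several gradient-based interpretation schemes have been proposed to reveal the influential variables in a network's prediction, standard gradient-based frameworks are commonly observed to lack robustness to input perturbations and the flexibility to incorporate prior knowledge of sparsity and group-sparsity structures. The paper proposes MoreauGrad, an interpretation scheme based on the Moreau envelope of the classifier network. MoreauGrad yields a smooth and robust interpretation of a multi-layer neural network and can be computed efficiently with first-order optimization methods. It also combines naturally with L1-norm regularization to output sparse or group-sparse explanations, which suit a wide range of deep learning applications. An empirical evaluation on standard computer vision datasets shows the qualitative and quantitative success of MoreauGrad compared with standard gradient-based interpretation methods.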
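
For reference, the Moreau envelope named in the abstract has a standard textbook definition, sketched below. The symbols $g$ (a real-valued function of the input, for example a class score), $\rho > 0$ (the smoothing parameter) and $\tilde{x}$ are notation chosen for this note and may not match the paper's own.

$$
g^{\rho}(x) = \min_{\tilde{x}} \; g(\tilde{x}) + \frac{1}{2\rho}\,\lVert \tilde{x} - x \rVert_2^2,
\qquad
\nabla g^{\rho}(x) = \frac{1}{\rho}\bigl(x - \operatorname{prox}_{\rho g}(x)\bigr)
$$

Here $\operatorname{prox}_{\rho g}(x)$ denotes the minimizer of the first expression. The gradient identity holds when $g$ is convex, and more generally when $\rho$ is small enough that the inner problem is strongly convex. Because the minimizer usually has to be found numerically, this form is consistent with the abstract's remark about first-order optimization, although the exact procedure is the paper's and is not reproduced here.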

Paper23 OCHID-Fi: Occlusion-Robust Hand Pose Estimation in 3D via RF-Vision

摘要原文: Hand Pose Estimation (HPE) is crucial to many applications, but conventional cameras-based CM-HPE methods are completely subject to Line-of-Sight (LoS), as cameras cannot capture occluded objects. In this paper, we propose to exploit Radio-Frequency-Vision (RF-vision) capable of bypassing obstacles for achieving occluded HPE, and we introduce OCHID-Fi as the first RF-HPE method with 3D pose estimation capability. OCHID-Fi employs wideband RF sensors widely available on smart devices (e.g., iPhones) to probe 3D human hand pose and extract their skeletons behind obstacles. To overcome the challenge in labeling RF imaging given its human incomprehensible nature, OCHID-Fi employs a cross-modality and cross-domain training process. It uses a pre-trained CM-HPE network and a synchronized CM/RF dataset, to guide the training of its complex-valued RF-HPE network under LoS conditions. It further transfers knowledge learned from labeled LoS domain to unlabeled occluded domain via adversarial learning, enabling OCHID-Fi to generalize to unseen occluded scenarios. Experimental results demonstrate the superiority of OCHID-Fi: it achieves comparable accuracy to CM-HPE under normal conditions while maintaining such accuracy even in occluded scenarios, with empirical evidence for its generalizability to new domains.

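Summary: Hand Pose Estimation (HPE) is crucial to many applications, but conventional camera-based HPE (CM-HPE) methods are entirely constrained by Line-of-Sight (LoS), because cameras cannot capture occluded objects. The paper proposes to exploit Radio-Frequency-Vision (RF-vision), which can bypass obstacles, to achieve occluded HPE, and introduces OCHID-Fi as the first RF-HPE method with 3D pose estimation capability. OCHID-Fi uses wideband RF sensors widely available on smart devices (e.g., iPhones) to probe 3D human hand poses and extract their skeletons behind obstacles. Because RF imaging is incomprehensible to humans and therefore hard to label, OCHID-Fi employs a cross-modality and cross-domain training process. It uses a pre-trained CM-HPE network and a synchronized CM/RF dataset to guide the training of its complex-valued RF-HPE network under LoS conditions. It then transfers knowledge learned in the labeled LoS domain to the unlabeled occluded domain via adversarial learning, which lets OCHID-Fi generalize to unseen occluded scenarios. Experiments demonstrate its superiority: it achieves accuracy comparable to CM-HPE under normal conditions and maintains that accuracy in occluded scenarios, with empirical evidence of generalizability to new domains.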

Paper24 Robust Frame-to-Frame Camera Rotation Estimation in Crowded Scenes

摘要原文: We present an approach to estimating camera rotation in crowded, real-world scenes from handheld monocular video. While camera rotation estimation is a well-studied problem, no previous methods exhibit both high accuracy and acceptable speed in this setting. Because the setting is not addressed well by other datasets, we provide a new dataset and benchmark, with high-accuracy, rigorously verified ground truth, on 17 video sequences. Methods developed for wide baseline stereo (e.g., 5-point methods) perform poorly on monocular video. On the other hand, methods used in autonomous driving (e.g., SLAM) leverage specific sensor setups, specific motion models, or local optimization strategies (lagging batch processing) and do not generalize well to handheld video. Finally, for dynamic scenes, commonly used robustification techniques like RANSAC require large numbers of iterations, and become prohibitively slow. We introduce a novel generalization of the Hough transform on SO(3) to efficiently and robustly find the camera rotation most compatible with optical flow. Among comparably fast methods, ours reduces error by almost 50% over the next best, and is more accurate than any method, irrespective of speed. This represents a strong new performance point for crowded scenes, an important setting for computer vision. The code and the dataset are available at https://fabiendelattre.com/robust-rotation-estimation.

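Summary: The paper presents an approach to estimating camera rotation in crowded, real-world scenes from handheld monocular video. Although camera rotation estimation is a well-studied problem, no previous method achieves both high accuracy and acceptable speed in this setting. Because other datasets do not address the setting well, the authors provide a new dataset and benchmark of 17 video sequences with high-accuracy, rigorously verified ground truth. Methods developed for wide-baseline stereo (e.g., 5-point methods) perform poorly on monocular video. Methods used in autonomous driving (e.g., SLAM) rely on specific sensor setups, specific motion models, or local optimization strategies (lagging batch processing) and do not generalize well to handheld video. Finally, for dynamic scenes, common robustification techniques such as RANSAC require large numbers of iterations and become prohibitively slow. The authors introduce a novel generalization of the Hough transform on SO(3) that efficiently and robustly finds the camera rotation most compatible with the optical flow. Among comparably fast methods, it reduces error by almost 50% over the next best, and it is more accurate than any other method regardless of speed. This is a strong new performance point for crowded scenes, an important setting for computer vision. The code and dataset are available at https://fabiendelattre.com/robust-rotation-estimation.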
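
The remark that RANSAC needs many iterations in dynamic scenes follows from the standard iteration-count formula: to draw at least one all-inlier sample of size s with probability p, when a fraction w of the correspondences are inliers, roughly log(1 - p) / log(1 - w^s) samples are needed. The sketch below is only an illustration of that formula. The function name, the default p = 0.99, and the example inlier ratios are assumptions made here and do not come from the paper.

```python
import math


def ransac_iterations(inlier_ratio: float, sample_size: int,
                      success_prob: float = 0.99) -> int:
    """Samples needed so that, with probability `success_prob`,
    at least one random minimal sample contains only inliers."""
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    if not 0.0 < success_prob < 1.0:
        raise ValueError("success_prob must be in (0, 1)")
    # Probability that a single minimal sample is outlier-free.
    p_good = inlier_ratio ** sample_size
    if p_good >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - success_prob) / math.log(1.0 - p_good))


if __name__ == "__main__":
    # Hypothetical inlier ratios; sample size 5 mirrors a 5-point solver.
    for w in (0.8, 0.5, 0.2):
        print(f"inlier ratio {w}: {ransac_iterations(w, 5)} iterations")
```

When moving people leave only a small fraction of correspondences consistent with the camera motion, the required count grows by orders of magnitude, which is the slowdown the abstract refers to.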

Paper25 Robust Mixture-of-Expert Training for Convolutional Neural Networks

Abstract: Sparsely-gated Mixture of Expert (MoE), an emerging deep model architecture, has demonstrated a great promise to enable high-accuracy and ultra-efficient model inference. Despite the growing popularity of MoE, little work investigated its potential to advance convolutional neural networks (CNNs), especially in the plane of adversarial robustness. Since the lack of robustness has become one of the main hurdles for CNNs, in this paper we ask: How to adversarially robustify a CNN-based MoE model? Can we robustly train it like an ordinary CNN model? Our pilot study shows that the conventional adversarial training (AT) mechanism (developed for vanilla CNNs) no longer remains effective to robustify an MoE-CNN. To better understand this phenomenon, we dissect the robustness of an MoE-CNN into two dimensions: Robustness of routers (i.e., gating functions to select data-specific experts) and robustness of experts (i.e., the router-guided pathways defined by the subnetworks of the backbone CNN). Our analyses show that routers and experts are hard to adapt to each other in the vanilla AT. Thus, we propose a new router-expert alternating Adversarial training framework for MoE, termed AdvMoE. The effectiveness of our proposal is justified across 4 commonly-used CNN model architectures over 4 benchmark datasets. We find that AdvMoE achieves 1% ~ 4% adversarial robustness improvement over the original dense CNN, and enjoys the efficiency merit of sparsity-gated MoE, leading to more than 50% inference cost reduction.

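Summary: Sparsely-gated Mixture of Experts (MoE), an emerging deep model architecture, has shown great promise for high-accuracy, ultra-efficient model inference. Despite MoE's growing popularity, little work has examined its potential to advance convolutional neural networks (CNNs), especially with respect to adversarial robustness. Since the lack of robustness has become one of the main hurdles for CNNs, the paper asks: how can a CNN-based MoE model be made adversarially robust, and can it be robustly trained like an ordinary CNN? A pilot study shows that conventional adversarial training (AT), developed for vanilla CNNs, is no longer effective at robustifying an MoE-CNN. To understand this, the authors dissect the robustness of an MoE-CNN into two dimensions: robustness of routers (the gating functions that select data-specific experts) and robustness of experts (the router-guided pathways defined by subnetworks of the backbone CNN). Their analyses show that routers and experts struggle to adapt to each other under vanilla AT. They therefore propose a router-expert alternating adversarial training framework for MoE, termed AdvMoE. Its effectiveness is validated on 4 commonly used CNN architectures over 4 benchmark datasets. AdvMoE achieves a 1% to 4% adversarial robustness improvement over the original dense CNN and retains the efficiency of sparsely-gated MoE, reducing inference cost by more than 50%.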

Paper26 Why Is Prompt Tuning for Vision-Language Models Robust to Noisy Labels?

摘要原文: Vision-language models such as CLIP learn a generic text-image embedding from large-scale training data. A vision-language model can be adapted to a new classification task through few-shot prompt tuning. We find that such prompt tuning process is highly robust to label noises. This intrigues us to study the key reasons contributing to the robustness of the prompt tuning paradigm. We conducted extensive experiments to explore this property and find the key factors are: 1. the fixed classname tokens provide a strong regularization to the optimization of the model, reducing gradients induced by the noisy samples; 2. the powerful pre-trained image-text embedding that is learned from diverse and generic web data provides strong prior knowledge for image classification. Further, we demonstrate that noisy zero-shot predictions from CLIP can be used to tune its own prompt, significantly enhancing prediction accuracy in the unsupervised setting.

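Summary: Vision-language models such as CLIP learn a generic text-image embedding from large-scale training data, and can be adapted to a new classification task through few-shot prompt tuning. The paper finds that this prompt tuning process is highly robust to label noise, which motivates a study of the key reasons behind that robustness. Extensive experiments identify two key factors: 1. the fixed classname tokens strongly regularize the optimization of the model, reducing the gradients induced by noisy samples; 2. the powerful pre-trained image-text embedding, learned from diverse and generic web data, provides strong prior knowledge for image classification. The authors further show that noisy zero-shot predictions from CLIP can be used to tune its own prompt, significantly improving prediction accuracy in the unsupervised setting.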

Paper27 GlueStick: Robust Image Matching by Sticking Points and Lines Together

摘要原文: Line segments are powerful features complementary to points. They offer structural cues, robust to drastic viewpoint and illumination changes, and can be present even in texture-less areas. However, describing and matching them is more challenging compared to points due to partial occlusions, lack of texture, or repetitiveness. This paper introduces a new matching paradigm, where points, lines, and their descriptors are unified into a single wireframe structure. We propose GlueStick, a deep matching Graph Neural Network (GNN) that takes two wireframes from different images and leverages the connectivity information between nodes to better glue them together. In addition to the increased efficiency brought by the joint matching, we also demonstrate a large boost of performance when leveraging the complementary nature of these two features in a single architecture. We show that our matching strategy outperforms the state-of-the-art approaches independently matching line segments and points for a wide variety of datasets and tasks. Code is available at https://github.com/cvg/GlueStick.

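Summary: Line segments are powerful features complementary to points: they offer structural cues, are robust to drastic viewpoint and illumination changes, and can be present even in texture-less areas. Describing and matching them, however, is harder than for points because of partial occlusions, lack of texture, or repetitiveness. The paper introduces a new matching paradigm in which points, lines, and their descriptors are unified into a single wireframe structure. The authors propose GlueStick, a deep matching Graph Neural Network (GNN) that takes two wireframes from different images and uses the connectivity between nodes to better glue them together. Besides the efficiency gained from joint matching, they demonstrate a large performance boost from exploiting the complementary nature of the two features in a single architecture. The matching strategy outperforms state-of-the-art approaches that match line segments and points independently across a wide variety of datasets and tasks. Code is available at https://github.com/cvg/GlueStick.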

Paper28 Explaining Adversarial Robustness of Neural Networks from Clustering Effect Perspective

摘要原文: Adversarial training (AT) is the most commonly used mechanism to improve the robustness of deep neural networks. Recently, a novel adversarial attack against intermediate layers exploits the extra fragility of adversarially trained networks to output incorrect predictions. The result implies the insufficiency in the searching space of the adversarial perturbation in adversarial training. To straighten out the reason for the effectiveness of the intermediate-layer attack, we interpret the forward propagation as the Clustering Effect, characterizing that the intermediate-layer representations of neural networks for samples i.i.d. to the training set with the same label are similar, and we theoretically prove the existence of Clustering Effect by corresponding Information Bottleneck Theory. We afterward observe that the intermediate-layer attack disobeys the clustering effect of the AT-trained model. Inspired by these significant observations, we propose a regularization method to extend the perturbation searching space during training, named sufficient adversarial training (SAT). We give a proven robustness bound of neural networks through rigorous mathematical proof. The experimental evaluations manifest the superiority of SAT over other state-of-the-art AT mechanisms in defending against adversarial attacks against both output and intermediate layers. Our code and Appendix can be found at https://github.com/clustering-effect/SAT.

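Summary: Adversarial training (AT) is the most commonly used mechanism for improving the robustness of deep neural networks. Recently, a novel adversarial attack against intermediate layers has exploited the extra fragility of adversarially trained networks to make them output incorrect predictions. This result implies that the search space of adversarial perturbations in adversarial training is insufficient. To explain why the intermediate-layer attack is effective, the authors interpret forward propagation as a Clustering Effect: the intermediate-layer representations of samples that are i.i.d. to the training set and share the same label are similar. They prove the existence of the Clustering Effect theoretically using Information Bottleneck Theory, and then observe that the intermediate-layer attack disobeys the clustering effect of the AT-trained model. Inspired by these observations, they propose a regularization method that extends the perturbation search space during training, named sufficient adversarial training (SAT), and give a proven robustness bound for neural networks. Experimental evaluations show that SAT outperforms other state-of-the-art AT mechanisms in defending against adversarial attacks on both output and intermediate layers. The code and appendix are available at https://github.com/clustering-effect/SAT.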

Paper29 Semi-supervised Semantics-guided Adversarial Training for Robust Trajectory Prediction

摘要原文: Predicting the trajectories of surrounding objects is a critical task for self-driving vehicles and many other autonomous systems. Recent works demonstrate that adversarial attacks on trajectory prediction, where small crafted perturbations are introduced to history trajectories, may significantly mislead the prediction of future trajectories and induce unsafe planning. However, few works have addressed enhancing the robustness of this important safety-critical task. In this paper, we present a novel adversarial training method for trajectory prediction. Compared with typical adversarial training on image tasks, our work is challenged by more random input with rich context and a lack of class labels. To address these challenges, we propose a method based on a semi-supervised adversarial autoencoder, which models disentangled semantic features with domain knowledge and provides additional latent labels for the adversarial training. Extensive experiments with different types of attacks demonstrate that our Semisupervised Semantics-guided Adversarial Training (SSAT) method can effectively mitigate the impact of adversarial attacks by up to 73% and outperform other popular defense methods. In addition, experiments show that our method can significantly improve the system’s robust generalization to unseen patterns of attacks. We believe that such semantics-guided architecture and advancement on robust generalization is an important step for developing robust prediction models and enabling safe decision-making.

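Summary: Predicting the trajectories of surrounding objects is a critical task for self-driving vehicles and many other autonomous systems. Recent work shows that adversarial attacks on trajectory prediction, in which small crafted perturbations are added to history trajectories, can significantly mislead the prediction of future trajectories and induce unsafe planning. Few works, however, have addressed the robustness of this safety-critical task. The paper presents a novel adversarial training method for trajectory prediction. Compared with typical adversarial training on image tasks, the work is challenged by more random inputs with rich context and by a lack of class labels. To address these challenges, the authors propose a method based on a semi-supervised adversarial autoencoder, which models disentangled semantic features using domain knowledge and provides additional latent labels for adversarial training. Extensive experiments with different types of attacks show that the Semi-supervised Semantics-guided Adversarial Training (SSAT) method mitigates the impact of adversarial attacks by up to 73% and outperforms other popular defense methods. Experiments also show that it significantly improves the system's robust generalization to unseen attack patterns. The authors regard this semantics-guided architecture and the progress on robust generalization as an important step toward robust prediction models and safe decision-making.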

Paper30 RLSAC: Reinforcement Learning Enhanced Sample Consensus for End-to-End Robust Estimation

Abstract: Robust estimation is a crucial and still challenging task, which involves estimating model parameters in noisy environments. Although conventional sampling consensus-based algorithms sample several times to achieve robustness, these algorithms cannot use data features and historical information effectively. In this paper, we propose RLSAC, a novel Reinforcement Learning enhanced SAmple Consensus framework for end-to-end robust estimation. RLSAC employs a graph neural network to utilize both data and memory features to guide exploring directions for sampling the next minimum set. The feedback of downstream tasks serves as the reward for unsupervised training. Therefore, RLSAC can avoid differentiating to learn the features and the feedback of downstream tasks for end-to-end robust estimation. In addition, RLSAC integrates a state transition module that encodes both data and memory features. Our experimental results demonstrate that RLSAC can learn from features to gradually explore a better hypothesis. Through analysis, it is apparent that RLSAC can be easily transferred to other sampling consensus-based robust estimation tasks. To the best of our knowledge, RLSAC is also the first method that uses reinforcement learning to sample consensus for end-to-end robust estimation. We release our codes at https://github.com/IRMVLab/RLSAC.

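Summary: The paper introduces RLSAC, a novel Reinforcement Learning enhanced SAmple Consensus framework for end-to-end robust estimation. Conventional sampling consensus-based algorithms sample many times to achieve robustness, but they cannot use data features and historical information effectively. RLSAC employs a graph neural network that uses both data and memory features to guide the exploration direction for sampling the next minimum set. The feedback of downstream tasks serves as the reward for unsupervised training, so RLSAC does not need differentiation to learn the features and the downstream feedback for end-to-end robust estimation. RLSAC also integrates a state transition module that encodes both data and memory features. Experimental results show that RLSAC can learn from features to gradually explore a better hypothesis, and analysis indicates that it can be easily transferred to other sampling consensus-based robust estimation tasks. To the best of the authors' knowledge, RLSAC is also the first method to use reinforcement learning for sample consensus in end-to-end robust estimation. The code is released at https://github.com/IRMVLab/RLSAC.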

Paper31 Deep Fusion Transformer Network with Weighted Vector-Wise Keypoints Voting for Robust 6D Object Pose Estimation

摘要原文: One critical challenge in 6D object pose estimation from a single RGBD image is efficient integration of two different modalities, i.e., color and depth. In this work, we tackle this problem by a novel Deep Fusion Transformer (DFTr) block that can aggregate cross-modality features for improving pose estimation. Unlike existing fusion methods, the proposed DFTr can better model cross-modality semantic correlation by leveraging their semantic similarity, such that globally enhanced features from different modalities can be better integrated for improved information extraction. Moreover, to further improve robustness and efficiency, we introduce a novel weighted vector-wise voting algorithm that employs a non-iterative global optimization strategy for precise 3D keypoint localization while achieving near real-time inference. Extensive experiments show the effectiveness and strong generalization capability of our proposed 3D keypoint voting algorithm. Results on four widely used benchmarks also demonstrate that our method outperforms the state-of-the-art methods by large margins.

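Summary: One critical challenge in 6D object pose estimation from a single RGBD image is the efficient integration of two different modalities, color and depth. The authors tackle it with a novel Deep Fusion Transformer (DFTr) block that aggregates cross-modality features to improve pose estimation. Unlike existing fusion methods, DFTr better models cross-modality semantic correlation by leveraging semantic similarity, so that globally enhanced features from different modalities are better integrated for improved information extraction. To further improve robustness and efficiency, the authors introduce a novel weighted vector-wise voting algorithm that uses a non-iterative global optimization strategy for precise 3D keypoint localization while achieving near real-time inference. Extensive experiments show the effectiveness and strong generalization capability of the proposed 3D keypoint voting algorithm. Results on four widely used benchmarks also show that the method outperforms state-of-the-art methods by large margins.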

Paper32 Lip2Vec: Efficient and Robust Visual Speech Recognition via Latent-to-Latent Visual to Audio Representation Mapping

Abstract: Visual Speech Recognition (VSR) differs from the common perception tasks as it requires deeper reasoning over the video sequence, even by human experts. Despite the recent advances in VSR, current approaches rely on labeled data to fully train or finetune their models predicting the target speech. This hinders their ability to generalize well beyond the training set and leads to performance degeneration under out-of-distribution challenging scenarios. Unlike previous works that involve auxiliary losses or complex training procedures and architectures, we propose a simple approach, named Lip2Vec that is based on learning a prior model. Given a robust visual speech encoder, this network maps the encoded latent representations of the lip sequence to their corresponding latents from the audio pair, which are sufficiently invariant for effective text decoding. The generated audio representation is then decoded to text using an off-the-shelf Audio Speech Recognition (ASR) model. The proposed model compares favorably with fully-supervised learning methods on the LRS3 dataset achieving 26 WER. Unlike SoTA approaches, our model keeps a reasonable performance on the VoxCeleb2-en test set. We believe that reprogramming the VSR as an ASR task narrows the performance gap between the two and paves the way for more flexible formulations of lip reading.

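Summary: Visual Speech Recognition (VSR) differs from common perception tasks because it requires deeper reasoning over the video sequence, even for human experts. Despite recent advances, current approaches rely on labeled data to fully train or finetune models that predict the target speech. This limits their ability to generalize beyond the training set and degrades performance in challenging out-of-distribution scenarios. Unlike previous works that involve auxiliary losses or complex training procedures and architectures, the paper proposes a simple approach, Lip2Vec, based on learning a prior model. Given a robust visual speech encoder, this network maps the encoded latent representations of the lip sequence to the corresponding latents from the paired audio, which are sufficiently invariant for effective text decoding. The generated audio representation is then decoded to text with an off-the-shelf Audio Speech Recognition (ASR) model. The proposed model compares favorably with fully supervised methods on the LRS3 dataset, achieving a word error rate (WER) of 26. Unlike state-of-the-art approaches, it keeps reasonable performance on the VoxCeleb2-en test set. The authors argue that reprogramming VSR as an ASR task narrows the performance gap between the two and paves the way for more flexible formulations of lip reading.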
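
The WER figure quoted above (presumably a percentage) is the usual word error rate: the word-level edit distance between the recognized text and the reference transcript, divided by the number of reference words. The following is a minimal Python sketch of that metric. The function name and the example sentences are made up for illustration, and the text normalization used in the paper's evaluation is not specified in the abstract, so none is applied here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of four reference words.
    print(word_error_rate("a b c d", "a x c d"))
```

Under this definition, a score of 26 read as a percentage would mean roughly one word-level error for every four reference words.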

Paper33 Enhancing Adversarial Robustness in Low-Label Regime via Adaptively Weighted Regularization and Knowledge Distillation

Abstract: Adversarial robustness is a research area that has recently received a lot of attention in the quest for trustworthy artificial intelligence. However, recent works on adversarial robustness have focused on supervised learning where it is assumed that labeled data is plentiful. In this paper, we investigate semi-supervised adversarial training where labeled data is scarce. We derive two upper bounds for the robust risk and propose a regularization term for unlabeled data motivated by these two upper bounds. Then, we develop a semi-supervised adversarial training algorithm that combines the proposed regularization term with knowledge distillation using a semi-supervised teacher. Our experiments show that our proposed algorithm achieves state-of-the-art performance with significant margins compared to existing algorithms. In particular, compared to supervised learning algorithms, performance of our proposed algorithm is not much worse even when the amount of labeled data is very small. For example, our algorithm with only 8% labeled data is comparable to supervised adversarial training algorithms that use all labeled data, both in terms of standard and robust accuracies on CIFAR-10.

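Summary: Adversarial robustness has recently received a lot of attention in the quest for trustworthy artificial intelligence, but recent work has focused on supervised learning, where labeled data is assumed to be plentiful. The paper investigates semi-supervised adversarial training, where labeled data is scarce. The authors derive two upper bounds on the robust risk and, motivated by them, propose a regularization term for unlabeled data. They then develop a semi-supervised adversarial training algorithm that combines this regularization term with knowledge distillation from a semi-supervised teacher. Experiments show that the algorithm achieves state-of-the-art performance by significant margins over existing algorithms. In particular, its performance is not much worse than that of supervised learning algorithms even when the amount of labeled data is very small. For example, with only 8% labeled data it is comparable to supervised adversarial training algorithms that use all labeled data, in both standard and robust accuracy on CIFAR-10.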

Paper34 On the Robustness of Normalizing Flows for Inverse Problems in Imaging

摘要原文: Conditional normalizing flows can generate diverse image samples for solving inverse problems. Most normalizing flows for inverse problems in imaging employ the conditional affine coupling layer that can generate diverse images quickly. However, unintended severe artifacts are occasionally observed in the output of them. In this work, we address this critical issue by investigating the origins of these artifacts and proposing the conditions to avoid them. First of all, we empirically and theoretically reveal that these problems are caused by “exploding inverse” in the conditional affine coupling layer for certain out-of-distribution (OOD) conditional inputs. Then, we further validated that the probability of causing erroneous artifacts in pixels is highly correlated with a Mahalanobis distance-based OOD score for inverse problems in imaging. Lastly, based on our investigations, we propose a remark to avoid exploding inverse and then based on it, we suggest a simple remedy that substitutes the affine coupling layers with the modified rational quadratic spline coupling layers in normalizing flows, to encourage the robustness of generated image samples. Our experimental results demonstrated that our suggested methods effectively suppressed critical artifacts occurring in normalizing flows for super-resolution space generation and low-light image enhancement.

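Summary: Conditional normalizing flows can generate diverse image samples for solving inverse problems. Most normalizing flows for inverse problems in imaging use the conditional affine coupling layer, which generates diverse images quickly. However, unintended severe artifacts are occasionally observed in their output. The paper addresses this issue by investigating the origins of these artifacts and proposing conditions to avoid them. First, the authors show empirically and theoretically that the problems are caused by an “exploding inverse” in the conditional affine coupling layer for certain out-of-distribution (OOD) conditional inputs. They then validate that the probability of erroneous artifacts in pixels is highly correlated with a Mahalanobis distance-based OOD score for inverse problems in imaging. Finally, they propose a remark for avoiding the exploding inverse and, based on it, suggest a simple remedy: replacing the affine coupling layers with modified rational quadratic spline coupling layers to encourage the robustness of generated image samples. Experiments show that the suggested methods effectively suppress critical artifacts in normalizing flows for super-resolution space generation and low-light image enhancement.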
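
To give a rough intuition for the “exploding inverse”, the toy sketch below uses a scalar affine coupling transform, y = x * exp(s) + t, whose inverse is x = (y - t) * exp(-s). In a conditional flow, s and t would be predicted by a network from the conditioning input. Here they are hand-picked numbers, and the function names are hypothetical, so this illustrates only the general mechanism under those assumptions and not the paper's analysis.

```python
import math


def coupling_forward(x: float, log_scale: float, shift: float) -> float:
    """Affine coupling transform: y = x * exp(s) + t."""
    return x * math.exp(log_scale) + shift


def coupling_inverse(y: float, log_scale: float, shift: float) -> float:
    """Inverse transform: x = (y - t) * exp(-s)."""
    return (y - shift) * math.exp(-log_scale)


if __name__ == "__main__":
    y = 0.1
    # Hand-picked log-scales; a strongly negative value stands in for what an
    # out-of-distribution conditioning input might make the network predict.
    for log_scale in (0.0, -2.0, -10.0):
        x = coupling_inverse(y, log_scale, shift=0.0)
        print(f"log_scale={log_scale:6.1f} -> inverse output {x:.3f}")
```

The inverse multiplies its input by exp(-s), so an unusually negative s turns a small value into a very large one. A coupling function with a bounded derivative, such as the spline layers the abstract proposes, limits this kind of amplification.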

Paper35 DEDRIFT: Robust Similarity Search under Content Drift

摘要原文: The statistical distribution of content uploaded and searched on media sharing sites changes over time due to seasonal, sociological and technical factors. We investigate the impact of this “content drift” for large-scale similarity search tools, based on nearest neighbor search in embedding space. Unless a costly index reconstruction is performed frequently, content drift degrades the search accuracy and efficiency. The degradation is especially severe since, in general, both the query and database distributions change.

We introduce and analyze real-world image and video datasets for which temporal information is available over a long time period. Based on the learnings, we devise DeDrift, a method that updates embedding quantizers to continuously adapt large-scale indexing structures on-the-fly. DeDrift almost eliminates the accuracy degradation due to the query and database content drift while being up to 100x faster than a full index reconstruction.

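Summary: The statistical distribution of content uploaded and searched on media sharing sites changes over time because of seasonal, sociological and technical factors. The paper investigates the impact of this “content drift” on large-scale similarity search tools based on nearest neighbor search in embedding space. Unless a costly index reconstruction is performed frequently, content drift degrades search accuracy and efficiency. The degradation is especially severe because, in general, both the query and database distributions change.

The authors introduce and analyze real-world image and video datasets for which temporal information is available over a long period. Based on what they learn, they devise DeDrift, a method that updates embedding quantizers to continuously adapt large-scale indexing structures on the fly. DeDrift almost eliminates the accuracy degradation caused by query and database content drift while being up to 100x faster than a full index reconstruction.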

Paper36 Adversarial Finetuning with Latent Representation Constraint to Mitigate Accuracy-Robustness Tradeoff

Abstract: This paper addresses the tradeoff between standard accuracy on clean examples and robustness against adversarial examples in deep neural networks (DNNs). Although adversarial training (AT) improves robustness, it degrades the standard accuracy, thus yielding the tradeoff. To mitigate this tradeoff, we propose a novel AT method called ARREST, which comprises three components: (i) adversarial finetuning (AFT), (ii) representation-guided knowledge distillation (RGKD), and (iii) noisy replay (NR). AFT trains a DNN on adversarial examples by initializing its parameters with a DNN that is standardly pretrained on clean examples. RGKD and NR respectively entail a regularization term and an algorithm to preserve latent representations of clean examples during AFT. RGKD penalizes the distance between the representations of the standardly pretrained and AFT DNNs. NR switches input adversarial examples to nonadversarial ones when the representation changes significantly during AFT. By combining these components, ARREST achieves both high standard accuracy and robustness. Experimental results demonstrate that ARREST mitigates the tradeoff more effectively than previous AT-based methods do.
